Over the last few weeks, the popular press has radiated enthusiasm about the state of “Artificial Intelligence” (AI) following AlphaGo’s win over Lee Sedol, one of the world’s strongest Go players. I remember a similar spike on the AI hype gauge when Deep Blue beat Garry Kasparov. The reality, however, is that we are very far from building truly intelligent software. Many experts agree and have tried to temper the resulting hype.

“However, we’re still a long way from a machine that can learn to flexibly perform the full range of intellectual tasks a human can—the hallmark of true artificial general intelligence.”

Source: “What we learned in Seoul with AlphaGo” – Demis Hassabis, CEO and Co-Founder of DeepMind

A few days ago we were treated to an “intelligent” chat bot from Microsoft called “Tay”. “Twitter taught [it] to be a racist asshole in less than a day”. A coordinated attack demonstrated how easily machine-learning-based software can fall over. This is because we still lack the ability to teach computers how to reason about basic philosophical concepts. That’s not to say that we can’t have a bot with a racist personality. If that’s how it was “brought up”, if those are the “values” it was given, then that’s fine.

We still have lots of work to do to put such concepts in place. I applaud Microsoft for trying. Yes, their system had a flaw that allowed attackers to shift the focus away from the real goal of the experiment. I hope Microsoft returns to the experiment once a fix is in place. Building self-evolving, self-learning systems is an important step toward advancing such “intelligent” experiences.

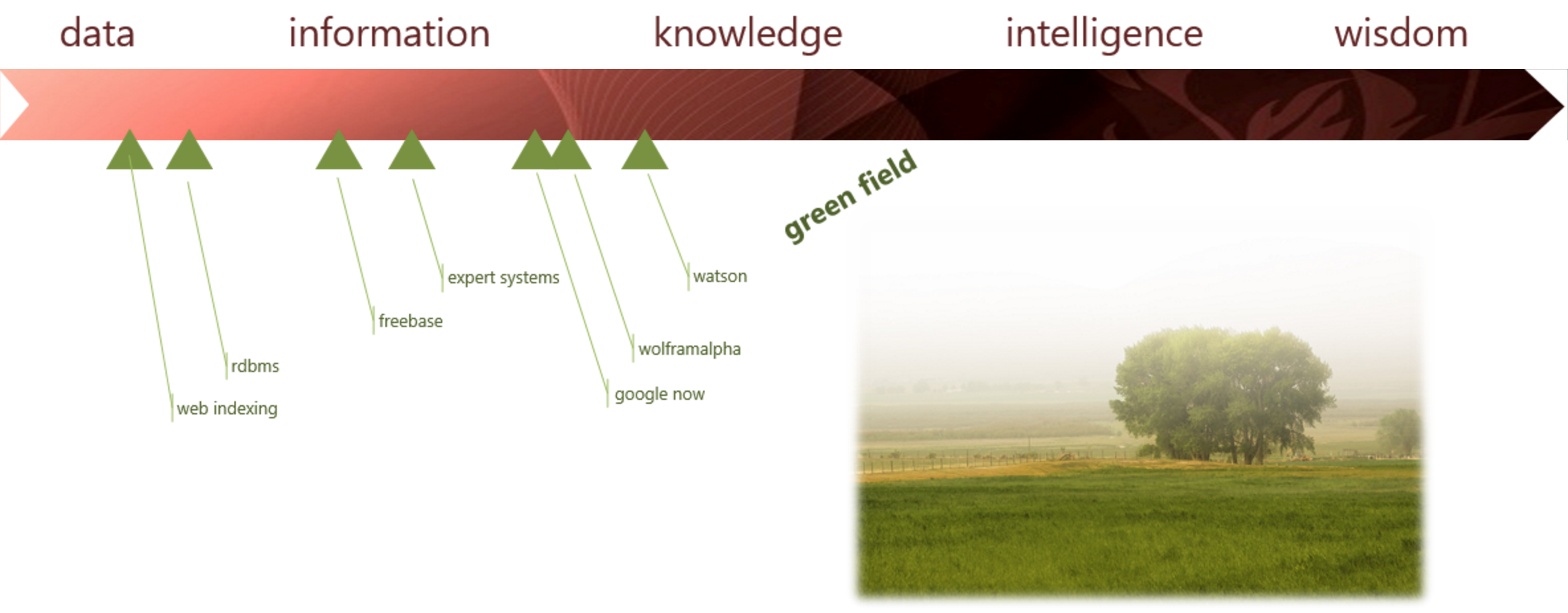

The situation reminded me of a slide I have been using in some of my presentations for six or seven years now. Here’s a copy of it from my 2013 QCon presentation, “A Platform for all that we know”. (Variations of this spectrum have been used extensively in the literature; I did not come up with it.)

We are far away from building truly intelligent experiences.

Perhaps the “A” in “AI” should stand for “Aspirational”, at least for a few more years 🙂

2 responses to “The ‘A’ in AI should stand for ‘Aspirational’”

I understand your thesis, but looking at your example timeline, why would you say we are “very far away”? The time between your first achieved milestone and your last achieved milestone is, let’s say, 15 years. Even if one stipulates that complexity increases at each milestone, this is being offset by matching increases in compute and storage power, available (meta)data to mine, advances in algorithms, etc. One should also be careful not to anthropomorphize and romanticize words like “intelligence” and “wisdom”: unless you believe in the direct intervention of a deity, mandatory quantum effects that we don’t understand, etc., intelligence and wisdom are, after all, simply operations on data. “Wisdom” just comes down to memoized meta-intelligence (being able to reach conclusions based on past experience without repeating the same mistakes, and knowing which lessons to apply in an analogous situation). Given that, and given that computers have virtually infinite memories and can share experience instantly among individuals, I fear computers will quickly become more “wise” than we are. It is only because our brains point primarily outward toward the world and not inward (a limitation that is also not inherent in an intelligent computing system) that we think intelligence, emotions, etc., are “special”.

Michael,

First, my apologies for not seeing your comment sooner. My WordPress installation flagged it as “spam”, so I was never notified.

The spectrum on my slide is merely a visualization of the increasing complexity I believe is associated with the work of bringing “wisdom” to the experiences we build.

It’s the area on which I have decided to focus my career, so I am more optimistic than my post might suggest. I am simply trying to temper the popular perception of the current state of AI, as shaped by the popular press.